Empirical distribution function

In statistics, the empirical distribution function, or empirical cdf, is the cumulative distribution function associated with the empirical measure of the sample. This cdf is a step function that jumps up by 1/n at each of the n data points. The empirical distribution function estimates the true underlying cdf of the points in the sample. A number of results quantify the rate of convergence of the empirical cdf to its limit.


Definition

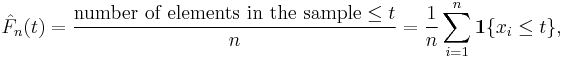

Let (x1, …, xn) be iid real random variables with the common cdf F(t). Then the empirical distribution function is defined as [1]

where 1{A} is the indicator of event A. For a fixed t, the indicator 1{xi ≤ t} is a Bernoulli random variable with parameter p = F(t), hence  is a binomial random variable with mean nF(t) and variance nF(t)(1 − F(t)). This implies that

is a binomial random variable with mean nF(t) and variance nF(t)(1 − F(t)). This implies that  is an unbiased estimator for F(t).

is an unbiased estimator for F(t).
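As an illustration of the definition, the empirical cdf can be evaluated by counting the sample points that do not exceed t and dividing by n. The following is a minimal sketch using NumPy; the function name `ecdf` and the toy sample are chosen here for illustration and do not come from the cited sources.

```python
import numpy as np

def ecdf(sample, t):
    """Evaluate the empirical cdf of `sample` at the point(s) `t`."""
    x = np.sort(np.asarray(sample, dtype=float))
    # With side="right", searchsorted returns the number of elements <= t.
    counts = np.searchsorted(x, t, side="right")
    return counts / x.size

# Toy sample with a repeated value: the step at 2.0 has height 2/n.
sample = [3.0, 1.0, 2.0, 2.0]
values = ecdf(sample, [0.5, 1.0, 2.0, 2.5, 3.0])
print(values)  # 0, 1/4, 3/4, 3/4, 1
```

Sorting once and using a binary search keeps each evaluation at O(log n), which is convenient when the function is evaluated at many points.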

Asymptotic properties

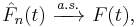

By the strong law of large numbers, the estimator  converges to F(t) as n → ∞ almost surely, for every value of t: [2]

converges to F(t) as n → ∞ almost surely, for every value of t: [2]

thus the estimator  is consistent. This expression asserts the pointwise convergence of the empirical distribution function to the true cdf. There is a stronger result, called the Glivenko–Cantelli theorem, which states that the convergence in fact happens uniformly over t: [3]

is consistent. This expression asserts the pointwise convergence of the empirical distribution function to the true cdf. There is a stronger result, called the Glivenko–Cantelli theorem, which states that the convergence in fact happens uniformly over t: [3]

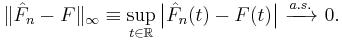

The sup-norm in this expression is called the Kolmogorov–Smirnov statistic for testing the goodness-of-fit between the empirical distribution $\hat F_n(t)$ and the assumed true cdf F. Other norms may reasonably be used here instead of the sup-norm. For example, the L²-norm gives rise to the Cramér–von Mises statistic.
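The sup-norm distance between the empirical cdf and a hypothesized cdf can be computed exactly from the sorted sample, because the supremum is approached at the jump points of the step function. The sketch below assumes that F is continuous; the names `ks_statistic` and `uniform_cdf` are illustrative choices, not part of any cited source.

```python
import numpy as np

def ks_statistic(sample, cdf):
    """Sup-norm distance between the empirical cdf of `sample` and `cdf`.

    Assumes `cdf` is continuous, so it suffices to compare the two
    functions just before and at each jump of the empirical cdf.
    """
    x = np.sort(np.asarray(sample, dtype=float))
    n = x.size
    f = np.array([cdf(v) for v in x])
    i = np.arange(1, n + 1)
    d_plus = np.max(i / n - f)         # empirical cdf above F, at the jumps
    d_minus = np.max(f - (i - 1) / n)  # empirical cdf below F, just before the jumps
    return max(d_plus, d_minus)

def uniform_cdf(t):
    """Cdf of the uniform distribution on [0, 1]."""
    return min(max(t, 0.0), 1.0)

d = ks_statistic([0.1, 0.4, 0.7], uniform_cdf)
print(d)  # approximately 0.3, attained at t = 0.7
```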

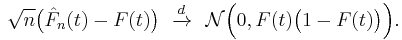

The asymptotic distribution can be further characterized in several different ways. First, the central limit theorem states that pointwise,  has asymptotically normal distribution with the standard √n rate of convergence: [2]

has asymptotically normal distribution with the standard √n rate of convergence: [2]
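The pointwise limit can be checked numerically. The following Monte Carlo sketch assumes uniform samples on [0, 1], so that F(t) = t, and compares the sample variance of the scaled estimation error at a fixed point with the variance F(t)(1 − F(t)) of the normal limit. The seed, sample size, number of replications and the point t = 0.3 are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n, reps, t = 500, 2000, 0.3

# Each row is one sample of size n from the uniform distribution on [0, 1].
samples = rng.uniform(size=(reps, n))

# Empirical cdf at t for every replication: the fraction of points <= t.
f_hat = np.mean(samples <= t, axis=1)

# Scaled estimation error sqrt(n) * (F_n(t) - F(t)), with F(t) = t here.
z = np.sqrt(n) * (f_hat - t)

empirical_var = z.var()
theoretical_var = t * (1 - t)  # F(t)(1 - F(t)) = 0.21
print(empirical_var, theoretical_var)
```

With these settings the two variances should agree up to Monte Carlo error, and a histogram of `z` would be expected to look approximately normal.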

This result is extended by the Donsker’s theorem, which asserts that the empirical process  , viewed as a function indexed by t ∈ R, converges in distribution in the Skorokhod space D[−∞, +∞] to the mean-zero Gaussian process GF = B∘F, where B is the standard Brownian bridge.[3] The covariance structure of this Gaussian process is

, viewed as a function indexed by t ∈ R, converges in distribution in the Skorokhod space D[−∞, +∞] to the mean-zero Gaussian process GF = B∘F, where B is the standard Brownian bridge.[3] The covariance structure of this Gaussian process is

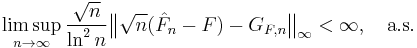

The uniform rate of convergence in Donsker’s theorem can be quantified by the result, known as the Hungarian embedding: [4]

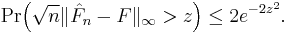

Alternatively, the rate of convergence of  can also be quantified in terms of the asymptotic behavior of the sup-norm of this expression. Number of results exist in this venue, for example the Dvoretzky–Kiefer–Wolfowitz inequality provides bound on the tail probabilities of

can also be quantified in terms of the asymptotic behavior of the sup-norm of this expression. Number of results exist in this venue, for example the Dvoretzky–Kiefer–Wolfowitz inequality provides bound on the tail probabilities of  : [4]

: [4]

In fact, Kolmogorov has shown that if the cdf F is continuous, then the expression  converges in distribution to ||B||∞, which has the Kolmogorov distribution that does not depend on the form of F.

converges in distribution to ||B||∞, which has the Kolmogorov distribution that does not depend on the form of F.

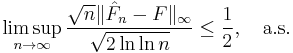
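The Dvoretzky–Kiefer–Wolfowitz inequality can be inverted to give a simultaneous confidence band for F: setting the tail bound 2exp(−2z²) equal to α and dividing z by √n gives the half-width ε = √(ln(2/α) / (2n)). The sketch below builds such a band at the sample points; the function name `dkw_band`, the normal test sample and the level α = 0.05 are illustrative assumptions.

```python
import numpy as np

def dkw_band(sample, alpha=0.05):
    """Return sorted points, lower and upper band values, and the half-width.

    The band covers the true cdf everywhere with probability at least
    1 - alpha, by the Dvoretzky-Kiefer-Wolfowitz inequality.
    """
    x = np.sort(np.asarray(sample, dtype=float))
    n = x.size
    eps = np.sqrt(np.log(2.0 / alpha) / (2.0 * n))
    # Empirical cdf at each sorted point (side="right" handles ties).
    f_hat = np.searchsorted(x, x, side="right") / n
    # A cdf takes values in [0, 1], so the band is clipped to that range.
    lower = np.clip(f_hat - eps, 0.0, 1.0)
    upper = np.clip(f_hat + eps, 0.0, 1.0)
    return x, lower, upper, eps

rng = np.random.default_rng(0)
sample = rng.normal(size=200)
x, lower, upper, eps = dkw_band(sample, alpha=0.05)
print(eps)  # approximately 0.096 for n = 200 and alpha = 0.05
```

The half-width depends only on n and α, not on F, which reflects the distribution-free nature of the bound.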

Another result, which follows from the law of the iterated logarithm, is that [4]

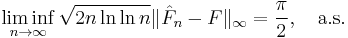

and

See also

- Càdlàg functions

- Empirical probability

- Empirical process

- Kaplan–Meier estimator, for censored processes

- Strassen’s theorem

- Survival function

- Dvoretzky–Kiefer–Wolfowitz inequality

References

- Shorack, G.R.; Wellner, J.A. (1986). Empirical processes with applications to statistics. New York: Wiley.

- van der Vaart, A.W. (1998). Asymptotic statistics. Cambridge University Press. ISBN 978-0-521-78450-4.

Notes

- [1] van der Vaart (1998, p. 265); PlanetMath

- [2] van der Vaart (1998, p. 265)

- [3] van der Vaart (1998, p. 266)

- [4] van der Vaart (1998, p. 268)
